Gain Control over ChatGPT with Cisco Umbrella

The recent surge of generative AI, including Large Language Models (LLMs) and the biggest elephant in the room right now, ChatGPT, has had a significant impact on various industries, including cybersecurity.

LLMs have shown great potential in improving productivity, automating and speeding up dull tasks – but they also present novel security challenges and concerns.

The rapid advancement of LLMs can be daunting, considering the lack of regulation and oversight surrounding their development and deployment, leaving little room for measures to ensure responsible and ethical use.

The dangers of LLMs in the cybersecurity space, according to ChatGPT:

- Malicious Use: LLMs can generate deceptive content for phishing and social engineering attacks.

- Fake Identities and Content: LLMs create realistic fake personas and generate misleading information.

- Automated Attacks: LLMs automate cyberattacks like brute-force attempts and vulnerability scanning.

- Exploiting System Vulnerabilities: LLMs identify and exploit system weaknesses to bypass security measures.

- Data Breaches: Unauthorized LLM access can lead to breaches and expose sensitive information.

- Privacy Concerns: LLMs processing personal data raise privacy and surveillance issues.

We are already seeing incidents of inadvertent data leaks, and companies (most recently Samsung) have implemented strict bans on ChatGPT usage. These bans follow cases of employees unintentionally exposing sensitive data while using ChatGPT to expedite and automate tasks.

You might be thinking the following:

We want our employees to tap into these AI systems for a productivity boost, but we also need to ensure they don’t accidentally leak sensitive enterprise or customer data. How can we find the right balance?

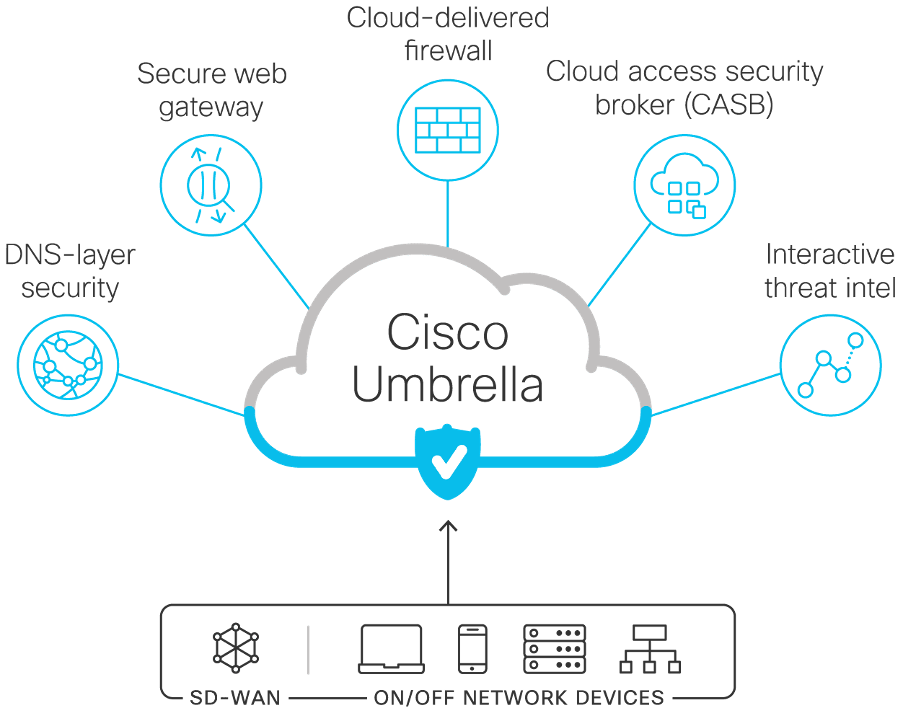

This is where Cisco Umbrella comes to the rescue!

To get a grip on emerging AIs early, it is essential to implement Data Loss Prevention (DLP) and application control measures from the start.

These tools enable admins to identify organisational usage of AI tools, assess associated data security risks, determine whether they should be allowed or blocked, and enforce appropriate permissions.

Application Discovery

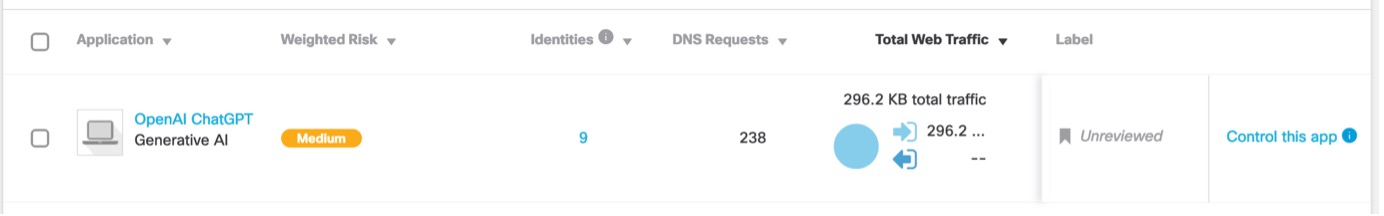

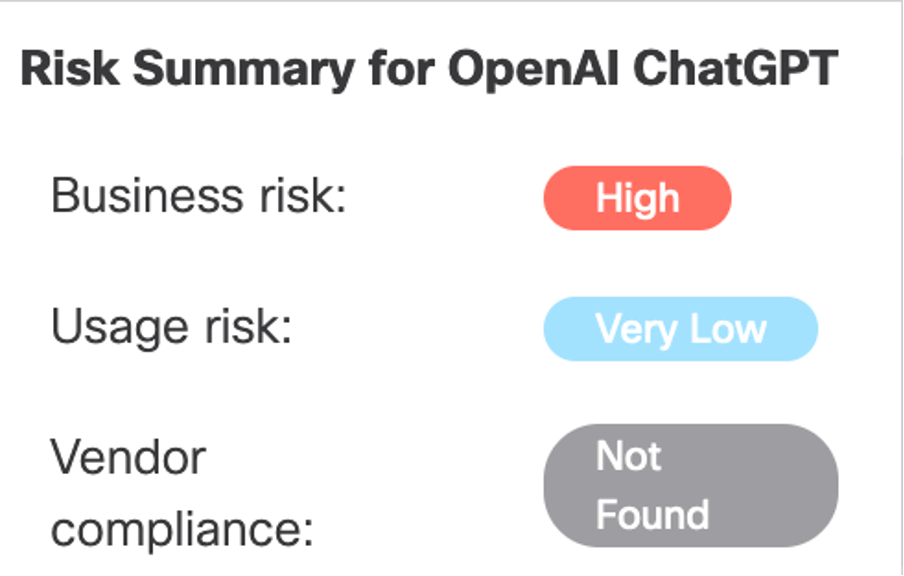

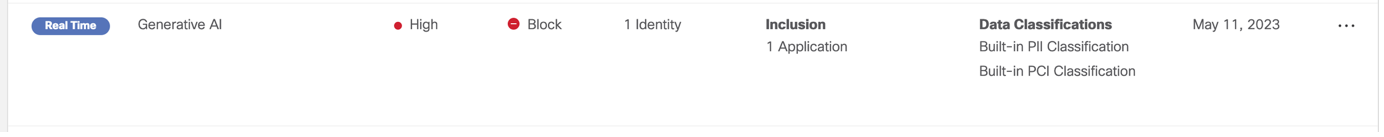

Within the Application Discovery dashboard, Umbrella categorises ChatGPT as a high business risk because it can so easily lead to inadvertent leakage of corporate intellectual property and other sensitive information.

Image: App Discovery for ChatGPT

Umbrella admins can monitor ChatGPT usage, including which users access it, how often, and from which locations.

Umbrella’s Data Loss Prevention (DLP) Solution

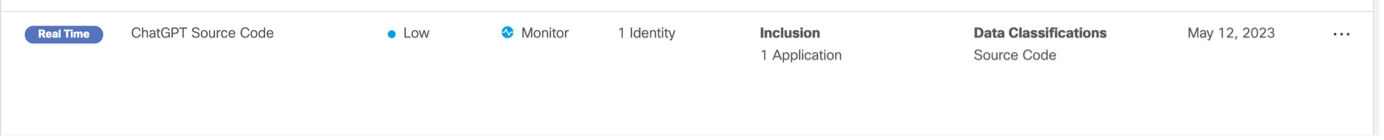

From the policy management dashboard, you can implement a DLP ‘monitor’ rule tailored to your most critical data classifications, allowing for enhanced event identification, volume tracking, and detailed analysis.

Image: Umbrella DLP rule to monitor ChatGPT source code and traffic

Now, it’s all well and good to monitor the traffic, but it’s time to decide whether we want to allow or block it to maintain full control.

Image: DLP rule for detecting sensitive information within ChatGPT

When enabled, this rule blocks any request to ChatGPT in which PCI or PII data, such as credit card numbers and expiry dates, is detected, and sends an alert.

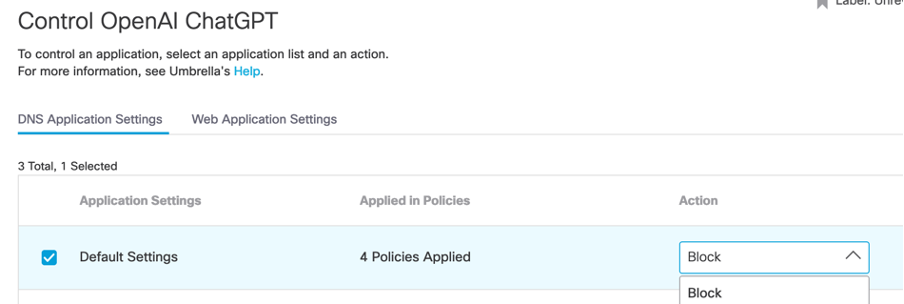
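To make the idea of detecting card data in a prompt more concrete, here is a minimal Python sketch of the kind of check a DLP data identifier might perform. It is not Umbrella's implementation: the function names, the regular expression and the `"block"`/`"allow"` return values are all assumptions made for illustration. It looks for 13 to 19 digit sequences and keeps only those that pass the Luhn checksum used by payment card numbers.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit, counting from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return digit strings in the text that look like valid card numbers."""
    matches = []
    for candidate in CARD_CANDIDATE.findall(text):
        digits = re.sub(r"[ -]", "", candidate)
        if luhn_valid(digits):
            matches.append(digits)
    return matches


def evaluate_prompt(text: str) -> str:
    """Hypothetical rule action: block when card data is found, else allow."""
    return "block" if find_card_numbers(text) else "allow"
```

A real DLP engine typically combines pattern matches like this with proximity terms (for example "expiry" or "CVV") and thresholds to reduce false positives; the checksum step alone already filters out most random digit strings.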

Alternatively, we can block ChatGPT within Application Discovery, which offers a simple allow or block action:

Image: App Discovery Ruleset

Umbrella’s Secure Web Gateway (SWG) Solution

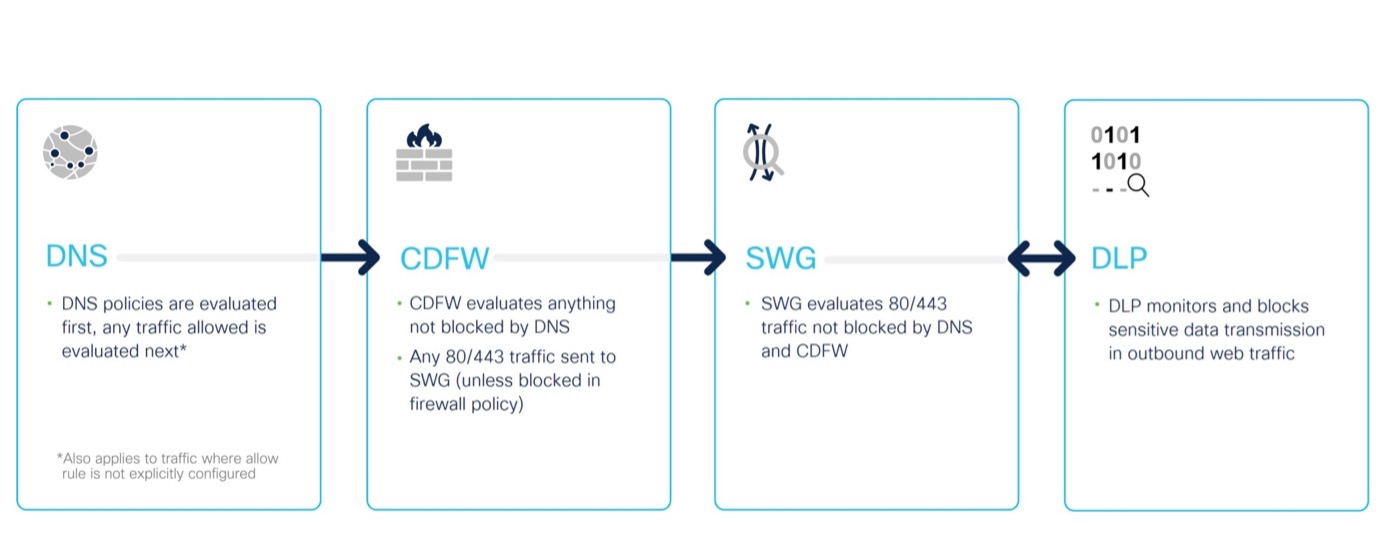

We can also block the generative AI category through SWG as part of the Secure Internet Gateway (SIG) platform. SWG goes beyond the standard DNS policy by adding URL-layer filtering and file inspection/sandboxing. If a request is permitted by the DNS and cloud-delivered firewall (CDFW) layers, SWG then evaluates it.

Image: Evaluation Steps for Traffic to Umbrella

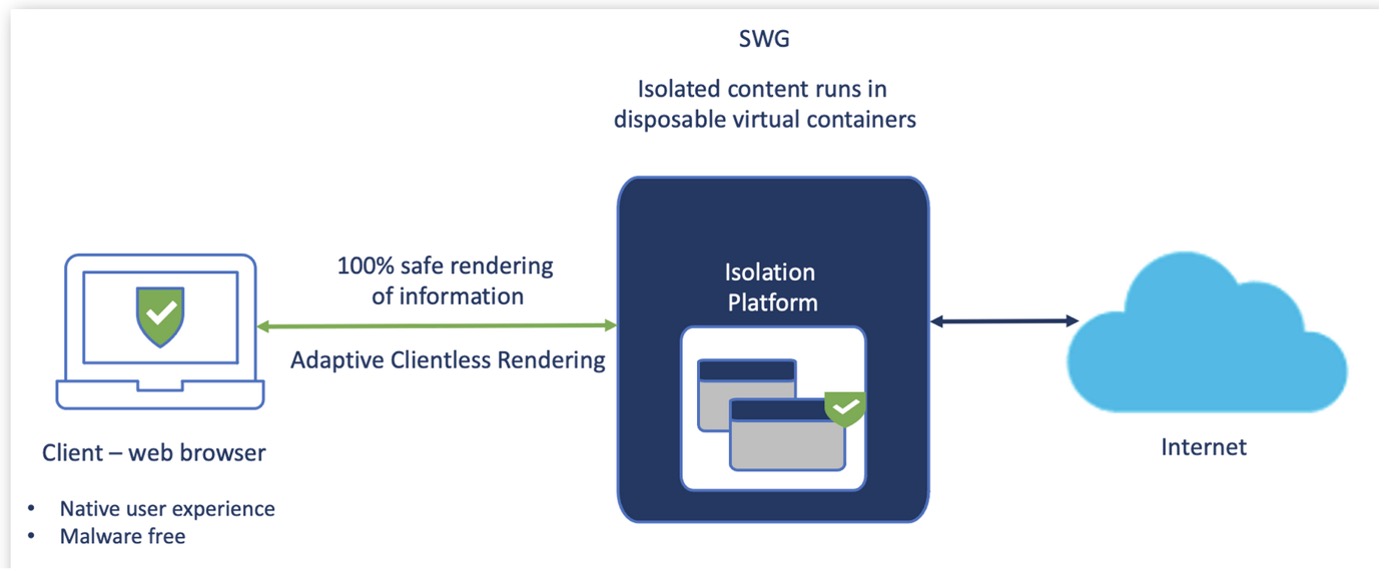
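The layered evaluation order (DNS, then CDFW, then SWG) can be pictured with a small conceptual model in Python. This is only a sketch of the ordering, not how Umbrella is built: the `Request` fields, the blocklists and the layer functions are hypothetical, and the real layers inspect far more than shown here.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# All policy values below are made up for illustration.
BLOCKED_DOMAINS = {"malware.example"}
ALLOWED_PORTS = {80, 443}
BLOCKED_WEB_CATEGORIES = {"Generative AI"}


@dataclass
class Request:
    domain: str
    port: int
    category: str  # web category assigned to the destination


def dns_layer(req: Request) -> Optional[str]:
    """DNS policy: block known-bad domains at resolution time."""
    return "block" if req.domain in BLOCKED_DOMAINS else None


def cdfw_layer(req: Request) -> Optional[str]:
    """Cloud-delivered firewall: block traffic on disallowed ports."""
    return "block" if req.port not in ALLOWED_PORTS else None


def swg_layer(req: Request) -> Optional[str]:
    """Secure Web Gateway: block by web category (URL-layer decision)."""
    return "block" if req.category in BLOCKED_WEB_CATEGORIES else None


LAYERS: list[tuple[str, Callable[[Request], Optional[str]]]] = [
    ("DNS", dns_layer),
    ("CDFW", cdfw_layer),
    ("SWG", swg_layer),
]


def evaluate(req: Request) -> str:
    """Run the layers in order; the first layer that blocks ends evaluation."""
    for name, layer in LAYERS:
        if layer(req) == "block":
            return f"blocked by {name}"
    return "allowed"
```

In this model a request only reaches the SWG check if the earlier layers let it through, which mirrors the order described in the text.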

Another feature of SWG is remote browser isolation (RBI).

RBI protects users across the expanded attack surface without requiring endpoint agents or browser plug-ins on the client device.

Simply set up a Web Policy that can include isolation of the following:

Security Categories:

- Malware, Command and Control Callbacks, Phishing Attacks and Potentially Harmful.

Applications:

- Cloud Storage, Collaboration, Office Productivity and Social Media

With OpenAI introducing the ChatGPT and Whisper APIs, developers can now create bespoke applications that interact with these models. However, there is a risk of malicious actors building applications that deliver malware through cloud storage and collaboration platforms to compromise the client’s device.

Umbrella’s RBI

The remote browser operates in a separate cloud container, reducing the attack surface. It renders content in the user’s local browser without affecting the user experience.

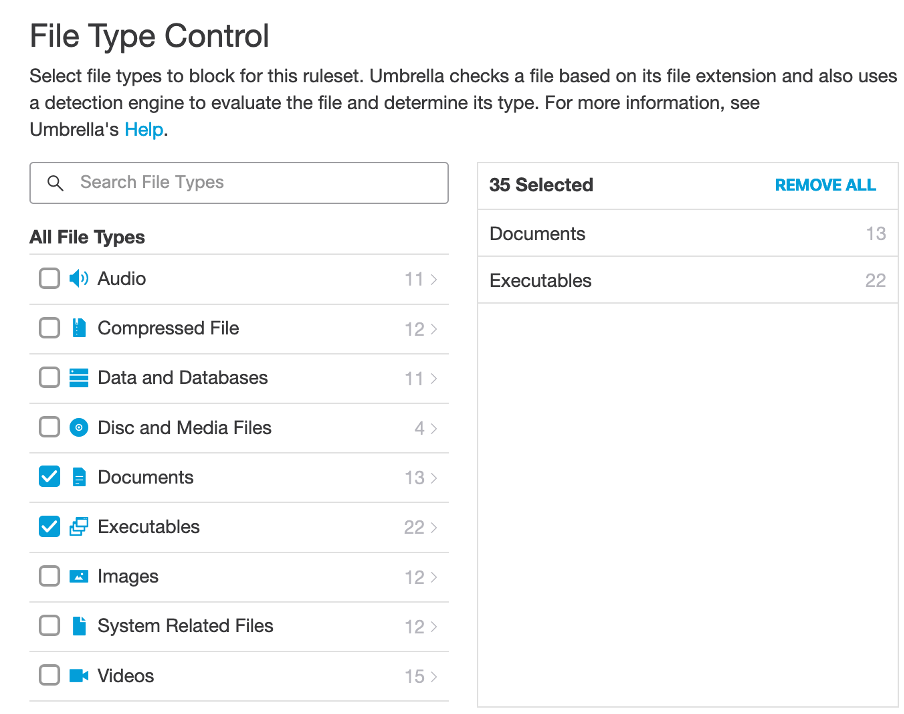

File Type Analysis

Additionally, a bespoke app could utilise OpenAI’s Whisper API for file uploads and downloads.

Implementing measures like filtering file extensions reduces the risk of introducing malicious code into the client environment.
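As a rough illustration of extension-based filtering, the Python sketch below checks a filename against a blocklist. The blocklist contents and the function name are assumptions chosen for the example. In Umbrella itself, file type control is configured in the policy rather than written as code.

```python
from pathlib import PurePosixPath

# Hypothetical blocklist of executable and script file types.
BLOCKED_EXTENSIONS = {".exe", ".dll", ".scr", ".bat", ".vbs", ".js"}


def is_blocked(filename: str) -> bool:
    """Return True if the file's final extension is on the blocklist.

    The comparison is case-insensitive, and only the last extension is
    checked, so "invoice.pdf.exe" is treated as an .exe file.
    """
    extension = PurePosixPath(filename).suffix.lower()
    return extension in BLOCKED_EXTENSIONS
```

Extension checks are easy to evade by renaming a file, so they work best alongside content-based file inspection rather than as a replacement for it.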

Conclusion

There’s no question that generative AI tools will continue to grow, with ongoing scrutiny of their risks and benefits.

In the end, rather than blocking generative AI outright (unless you desire peace of mind in the *unlikely* event of a singularity approaching…!), we can leverage Umbrella’s tools to enhance employee productivity without compromising data security.

Interested in Cisco Umbrella?

If you’ve found this insightful and would like to find out more about our Cisco Umbrella services, including deployment, integration and management – please get in touch:

[email protected] or call 0333 370 1353.

Need Advice?

If you need any advice on this issue or any other cyber security subjects, please contact Protos Networks.

Email: [email protected]

Tel: 0333 370 1353